Opinion: It’s time to get serious about self-driving cars

Antonio Avenoso, Executive Director

A shorter version of this article was published as a letter in the Financial Times on 24 August 2016.

The recent death of a man driving a Tesla electric car in ‘Autopilot’ mode raises many questions for policymakers, vehicle manufacturers and the public at large.

Regarding the specific crash in question, an official investigation is underway and expected to report within 12 months. But several facts have already been made public. Firstly, the car was travelling above the speed limit. It is not clear why. With GPS data, regularly updated maps and speed sign recognition technology, automated vehicles should know the correct speed limits at all times, and not exceed them. Driver assistance systems should not assist in breaking the law.

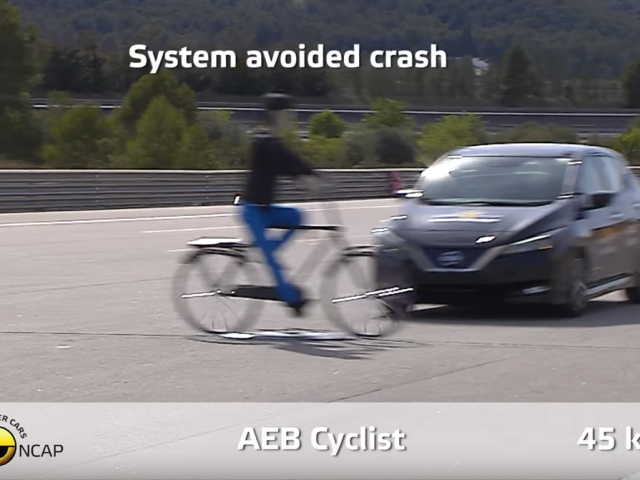

Secondly, the car was driving at high speed on a road where other vehicles can cross the highway in its path. A precautionary approach would be to demand that automated systems be independently approved for use on a step-by-step basis, starting with the safest environments such as divided motorways without cross junctions or road works. An automated car should know what type of road it is on and what road features lie ahead. If it doesn’t, control should be given back to the driver.

Tesla tells drivers its software is in ‘beta’ and that it should be supervised at all times. But letting customers take a chance on a potentially lethal technology is not the way we regulate medicines. The same must be true for cars, which carry the added risk of killing other road users, not just the person taking the risk.

A white lorry crossing a highway on a sunny day in Florida is not a particularly unusual event. But only Tesla knows what scenarios its cars are designed to recognise. Independent checks, or ‘driving tests’, for automated systems and self-driving vehicles must be a prerequisite for their use. If that means a strictly limited set of scenarios for several years, so be it. Many countries have graduated driving licences for novice drivers – we should treat automated systems with the same caution.

Following the Florida crash, the American authorities launched a full and admirably transparent investigation. The speed and location of the crash are already known and a full list of questions sent to Tesla has been published by the regulator with a clear deadline for a response. Europe has no equivalent central body or process.

One thing is becoming crystal clear: neither the US nor European regulatory system is set up to deal with the challenge of approving these technologies for public use. Administrations designed to check that vehicles offer structural occupant protection in the event of a crash, decent headlights and powerful enough brakes are not yet ready to independently verify whether millions of lines of computer code will effectively protect drivers and other road users as cars increasingly drive themselves. Too much emphasis is on tests carried out by the manufacturer, the results of which are usually closely guarded secrets.

In Europe, Tesla’s Model S was approved by the Dutch regulatory body for new vehicles. The new Mercedes-Benz E-class, which also features a number of automated technologies, was approved by the German regulator. But a crash would be investigated in the country where it took place. The Volkswagen emissions scandal has highlighted the need for independent oversight of the national regulators – automated cars make this ever more urgent.

It is unclear how software updates, such as those regularly issued ‘over the air’ by Tesla, are checked and by whom. The public will demand that ‘bugs’ that can cause a serious or even fatal crash are fixed as soon as possible, but operating system updates in the sphere of mobile phones and computers have been known to create other, unforeseen problems. With millions of potential combinations of components, vehicles and software updates, the job of ensuring cars are safe, and remain so, will not be a small one. Most recalls in Europe, even for safety problems, are voluntary, meaning that owners are encouraged, but not obliged, to get them fixed. Do we want unpatched self-driving vehicles on our roads?

Tesla’s CEO says that automated cars will save millions of lives. Certainly the potential is undeniable. The eradication of speeding, drink driving, distraction and fatigue could revolutionise road safety. But there are also new risks in the short term. Clearly some drivers are already becoming too reliant on systems that are neither ready nor able to take on all the complexities of the driving task.

To rush in risks putting the public off altogether. In Europe the public has become largely numb to the 500 deaths that occur on our roads every week. Terrorism, plane crashes and illegal drug overdoses create much more fear, despite killing a much smaller proportion of the population. The fear of being killed by your own car, even if the risks are smaller than driving yourself, could put many people off.

If a self-driving car killed a large number of people in a single collision, it could destroy public confidence in the technology. That’s why a precautionary and step-by-step approach is needed, along with an overhaul of the regulatory system for new vehicles. Governments across the world are racing to welcome self-driving cars to their roads; they would be wiser to take it slow.